Whitepaper on Zero Trust security for web apps


What is Zero Trust?

Zero Trust (ZT) is a cybersecurity model that emphasizes verifying every access to protected digital resources with zero assumed trust. Every request is verified by all available means, regardless of whether it comes from an unknown or a trusted network, or whether a human or a machine is behind it.

As recommended by the National Institute of Standards and Technology (NIST), a Zero Trust approach is primarily focused on data and service protection but can and should be expanded to include all enterprise assets (devices, infrastructure components, applications, virtual and cloud components) and subjects (end users, applications and other non-human entities that request information from resources).

In this whitepaper, we focus primarily on the Zero Trust approach to securing web applications (web apps), which includes all kinds of hosted, server-side programs: microservices, API platforms, MVC apps, serverless functions and so on. While discussing the key tenets of the Zero Trust approach, we’ll give real-world scenarios, cyber attacks, and practical guiding principles and techniques for applying those tenets.

Why Zero Trust?

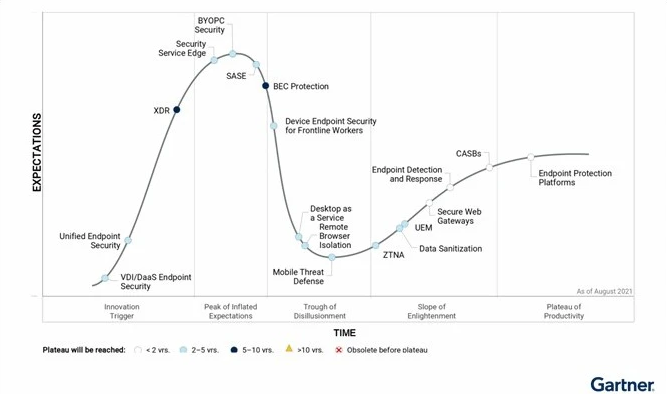

According to Gartner, interest in Zero Trust continues to outpace the broader cybersecurity market, growing more than 230% in 2020 over 2019. In a separate VentureBeat survey of chief information security officers in financial services and manufacturing, Zero Trust was described as a business decision first.

Several factors have led to the significant increase in the importance of the Zero Trust approach in recent years. We’ll go through some of the major ones in the following sections.

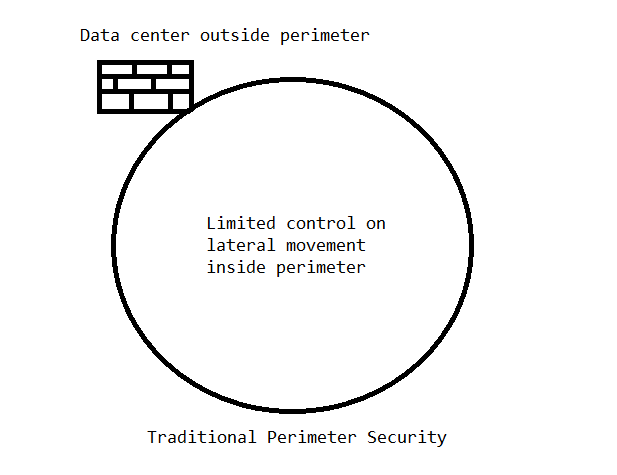

Perimeter-based security is no longer effective

Perimeter-based security, which designates certain networks inside the corporate network perimeter as trusted and secure, is becoming ineffective in the current landscape of cloud computing and remote work. The COVID pandemic multiplied the remote workforce to a level never imagined before. Many companies have gone fully remote or have committed, as an HR policy, to keeping a significant portion of their staff remote-only even after the pandemic. As observed in NIST’s ZTA document:

A typical enterprise’s infrastructure has grown increasingly complex. A single enterprise may operate several internal networks, remote offices with their own local infrastructure, remote and/or mobile individuals, and cloud services. This complexity has outstripped legacy methods of perimeter-based network security as there is no single, easily identified perimeter for the enterprise. Perimeter-based network security has also been shown to be insufficient since once attackers breach the perimeter, further lateral movement is unhindered.

This complexity in the enterprise has led to the development of the Zero Trust model.

Increased dependency on third-party services and components has multiplied the attack surface

Enterprises are adopting SaaS services even for internal tooling and business workflows rather than building bespoke software, because it’s cheaper and available on demand.

Public package managers such as NuGet and npm (for Node.js) have gained significant popularity among developers building modern applications. These package repositories largely host free components and tooling from third-party vendors usually not known to the companies consuming the packages. Their public nature has also attracted attackers, who see an easy opportunity either to find a vulnerability in a popular package or to take over a popular package maintained by a single developer or a small team (and introduce a vulnerability into it). Either way, they can then penetrate the enterprise, for example via its automated build machines or by directly taking over a production server.

It’s obviously not possible to definitively verify, let alone control, the security posture of third-party SaaS services and packages from public repositories. Therefore, a Zero Trust approach is needed to prevent or limit exploits originating from these services and components.

Rise in sophistication and number of cyber attacks

Enterprises have been aggressively collecting more and more consumer data to analyze behavior and make personalized recommendations to improve online sales and generate new digital revenue. However, a dramatic jump in cyberattacks in recent years, compromising the sensitive personal data of hundreds of millions of individuals, has shaken consumer trust.

According to KPMG’s recent “Corporate Data Responsibility: Bridging the consumer trust gap” report, 83% of customers are unwilling to share their data to help businesses make better products and services. Customers are not happy: 64% say companies are not doing enough to protect their data, 47% are very concerned their data will be compromised in a hack, and 51% are fearful their data will be sold.

To prevent or minimize damage from cyber attacks, companies need to adopt the Zero Trust security model at the level of individual customer accounts, endpoints, identities and resources. This approach will also help companies provide a full view of customer data and explain how they are using it. The greater transparency will contribute to restoring consumer trust.

Popularity of API-first approach has magnified data exposure to untrusted clients

A customer record usually contains many fields, collected from various sources and events over the customer’s lifetime, but only a handful of them need to be shown on any given customer interface.

In traditional server-side rendered web applications, the customer data returned in the response, as part of the interface markup, usually contains only the fields needed for the particular UI, which is likely a small portion of the entire record.

API applications, on the other hand, tend to return the entire customer record in an endpoint’s response, because the API has no knowledge of the UI’s needs: coupling the two makes little architectural sense, and developing separate endpoints to serve the needs of different clients is inefficient.

However, as more and more server-side software is written using the API-first approach, and its consumption moves to untrusted clients like browsers and mobile apps, a new kind of threat has emerged: leaking unintended sensitive data through clients over which the user may not have full control.

A Zero Trust approach is therefore needed to control the outflow of data by default, and to establish the privileges required to return additional data in the response.

Key tenets of Zero Trust security for web applications

A successful application of Zero Trust security requires thinking like an attacker and defending like a soldier. If you fail at either of these, you’ll end up leaving a weakness that a determined attacker will be able to find one day. Remember, the attacker needs only one weakness to penetrate into your fort, while you need to defend the entirety of it.

So, Zero Trust is not just an approach but a mindset. You can develop this mindset by understanding the major tenets of the Zero Trust approach and absorbing them into your thought process. In the next few sections, we’ll go through these tenets.

Note

Zero Trust tenets mentioned below have been adapted to be particularly relevant to the developers so they can build hosted services which are secure by design. Read this post if you want to learn about the tenets of NIST’s Zero Trust Architecture (ZTA), which deals with the security posture of the entire enterprise.

1. Assume breach by default

While designing any interface or API, the default mindset is to think about the convenience of its consumer. While that’s all good and makes perfect business sense, you cannot ignore the fact that if a breach occurs, there won’t just be a great financial loss, but a reputational one too for the business. Consumer trust in your organization would be shaken and the growth prospect would get dimmer. Not to mention the adverse effect on the consumers whose sensitive personal data got compromised.

So, the first tenet of Zero Trust approach is to assume breach as the default phenomenon. During the design phase of any feature you should think “what if the attacker is the one making a request to this API? What should I do to protect it?” This question leads you to the second tenet.

When I was the Chief Security Architect of ISCP (security powered by ASPSecurityKit), a business requirement came up in a team leads meeting. It was about building an endpoint to automatically check whether a financial account already exists for the same personal details (first and last names, SSN, etc.).

It was to be used on a publicly available investor sign up form to prevent duplicate entries, which the staff had been seeing at least a few times a month. It’d be a great convenience for the staff, I concurred, but I also highlighted the danger posed by such a public endpoint, which attackers could use to find out whether a given person already had an account with us.

I suggested some options to make the endpoint secure, including reporting the result of the existence check only if the existing record had been created from the same IP as the current request; in all other cases, it would respond with an inconclusive result. (Authentication wasn’t an option, by the way.) Eventually, it was decided not to build the feature right away, and instead to watch the logs to see whether we were really getting enough duplicate requests to warrant such a potentially dangerous public endpoint.

2. Aggressively verify every request

Since you have zero assumed trust for the caller, it’s natural that you verify every request with all possible options to establish its legitimacy. You do not waive any requirement just because you think the API is only for internal use, or is undocumented so no one knows about it.

You do not leave God mode in your APIs – with a flag or a switch that only developers know – because eventually, the hackers will get to know about them too.

ASPSecurityKit’s security pipeline is built on this model: by default, it subjects every request to the entire series of security checks, which includes authentication, multi-factor, activity data authorization, IP firewall, account verification, Cross-Site Scripting (XSS) and so on.

Are there guiding principles that can help in designing checks that better conform to the Zero Trust approach? Yes, I can cite at least a couple of them below, based on my experience.

Absence of evidence should always mean absence of access

An important guiding principle while designing better Zero Trust checks is that each check must require the explicit presence of evidence to pass, and not the other way round. For example, if you have a firewall feature and there’s no IP in the white-list, you should assume no access and deny all requests rather than assuming full access (by any IP). This is how the IP firewall check in ASPSecurityKit (ASK) works: it blocks the request from continuing if there’s no IP in the white-list.
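To make the principle concrete, here is a minimal sketch of a default-deny IP check in Python. It is not ASK’s implementation; the function name `is_ip_allowed` and the list format (plain addresses or CIDR ranges) are assumptions made for illustration.

```python
import ipaddress


def is_ip_allowed(client_ip: str, allow_list: list) -> bool:
    """Return True only when client_ip matches an entry in allow_list.

    Hypothetical helper: an empty or malformed list grants nothing,
    because absence of evidence must mean absence of access.
    """
    try:
        address = ipaddress.ip_address(client_ip)
    except ValueError:
        return False  # an unparseable caller address is denied

    for entry in allow_list:
        try:
            network = ipaddress.ip_network(entry, strict=False)
        except ValueError:
            continue  # a malformed entry never grants access
        if address in network:
            return True

    return False  # nothing matched (including the empty list): deny
```

Note that the only path returning `True` is an explicit match; every other path, including an empty list, falls through to a denial.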

In fact, all checks in ASK’s security pipeline work on this important Zero Trust principle. For instance, ASK’s access control feature, ADA, requires by default that the authenticated identity possess a unique permission for every action (web operation). Developers can override this behavior for one or more actions by explicitly using the customization options provided, but this is the default.

Contrary to the Zero Trust principle, ASP.NET’s AuthorizeAttribute, which is the primary mechanism for protecting APIs in the framework’s built-in identity and access management, by default grants full access to any authenticated identity if you don’t explicitly specify a role value as a parameter to the AuthorizeAttribute on actions or controllers.

We can’t emphasize the importance of this principle enough. Zero Trust is primarily about Zero assumed Trust, and our security mechanisms must work on this primary principle to help developers make the right security choices during development.
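The difference between the two defaults can be sketched in a few lines of Python. This is an illustration of the idea only, not ASK’s or ASP.NET’s code; `Identity`, `authorize`, `EXPLICITLY_PUBLIC_ACTIONS` and the action names are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Identity:
    user_id: str
    permissions: set = field(default_factory=set)


# Actions a developer has deliberately opened up (hypothetical example).
EXPLICITLY_PUBLIC_ACTIONS = {"health.ping"}


def authorize(identity: Optional[Identity], action: str) -> bool:
    """Default-deny: being authenticated alone is never enough."""
    if action in EXPLICITLY_PUBLIC_ACTIONS:
        return True  # an explicit, visible opt-out from the default
    if identity is None:
        return False  # no identity, no access
    # The identity must hold a permission named after this exact action.
    return action in identity.permissions
```

With this default, forgetting to configure an action results in a denied request that someone will notice, rather than an open endpoint that nobody does.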

How many checks are enough?

A key guiding principle in deciding how many security checks to deploy for a particular action and identity is: the greater the impact or the more significant the privilege, the greater the number of checks to enforce. This doesn’t mean that low-impact actions or low-privileged identities get a free run; it only means that higher-impact actions and more privileged identities need to be verified with even more stringent security checks.

In March 2021, Ubiquiti, an IoT company that had sold 85 M+ cloud-enabled IoT devices, got hacked, with the hacker getting root administrator access to all Ubiquiti AWS accounts, including all S3 data buckets, all application logs, all databases, all user database credentials, and the secrets required to forge single sign-on (SSO) cookies, which basically means the hackers had credentials to remotely access customers’ IoT systems.

Such massive privileged access was made possible by one simple breach of the admin credentials, stored in LastPass (a password manager).

Admin credentials should always be protected by additional measures such as two-factor authentication and an IP firewall. In ISCP, all site-to-site API keys were protected by the IP firewall check on production, even the ones used by the developers.

Just like ASK’s other checks, its IP firewall check is applicable to user sessions and not just API keys. This means that companies can even restrict highly privileged accounts, like those of admins, to be operable only from known IPs/networks, which could be a static IP behind a secure VPN, enabling highly privileged users to work remotely in safety.
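As a rough sketch of how checks can scale with privilege, the Python below requires a baseline check from everyone and additional checks from privileged callers. The check names and the `RequestContext` type are assumptions for illustration and do not reflect how ASK organizes its pipeline.

```python
from dataclasses import dataclass

# Hypothetical check names; every request must pass the baseline.
BASELINE_CHECKS = ("authenticated",)
# Privileged identities or high-impact actions must pass more.
ELEVATED_CHECKS = ("authenticated", "two_factor", "known_ip")


@dataclass(frozen=True)
class RequestContext:
    authenticated: bool
    two_factor: bool
    known_ip: bool
    is_privileged: bool  # admin identity or high-impact action


def required_checks(ctx: RequestContext) -> tuple:
    return ELEVATED_CHECKS if ctx.is_privileged else BASELINE_CHECKS


def failed_checks(ctx: RequestContext) -> list:
    """Names of required checks that did not pass; empty means allow."""
    return [name for name in required_checks(ctx) if not getattr(ctx, name)]
```

An admin whose password alone was stolen would still fail `two_factor` and `known_ip` here, which is the kind of extra barrier the stolen admin credentials in the incident above did not face.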

3. Grant least privilege access for the action

Suppose you need to pull some data to show in a dashboard from the API in an automated fashion. You have an option to create an API key, so you do that and put it in the pull-data-to-the-dashboard tool and congratulate yourself for the job well done. However, the API key has the same level of access as the privileged user it belongs to.

Clearly, by doing so, you’ve violated an important tenet of Zero Trust: grant the least privileged access required for the action. If an attacker somehow gets to the pull-data-to-the-dashboard tool, they’d grab the API key and then obtain unfettered access to the API as you.

This isn’t hypothetical: internal tools used by support/admin staff often have such highly privileged access to production systems. Hackers just need to get access to those internal tools, which they often achieve by social engineering, and they are able to penetrate the production systems with ease.

Twitter’s own investigation of the unprecedented July 15, 2020 attack, which resulted in takeovers of numerous high-profile accounts including those of former President Barack Obama, then Democratic candidate Joe Biden, and Tesla CEO Elon Musk, revealed the following:

We detected what we believe to be a coordinated social engineering attack by people who successfully targeted some of our employees with access to internal systems and tools.

So, the third tenet of the Zero Trust approach is least privilege access; that is, you should grant only those permissions that are needed to perform a certain action. In our example scenario, we just need to read some data into the dashboard, so we should grant the API key access to only the read endpoints.
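For the dashboard scenario, a least-privilege key could look like the following Python sketch. The `ApiKey` type, the key name and the endpoint paths are hypothetical; the point is only that the key carries its own narrow grant instead of inheriting the owner’s privileges.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ApiKey:
    key_id: str
    allowed: frozenset  # explicit (HTTP method, path) pairs


def key_permits(key: ApiKey, method: str, path: str) -> bool:
    """Allow a call only if this exact method and path was granted."""
    return (method.upper(), path) in key.allowed


# A key for the dashboard tool: two read endpoints and nothing else.
dashboard_key = ApiKey(
    key_id="dashboard-reader",
    allowed=frozenset({
        ("GET", "/reports/daily"),
        ("GET", "/reports/summary"),
    }),
)
```

If the dashboard tool is compromised, the attacker holding `dashboard_key` can read those two reports, but cannot write, delete or reach any other endpoint.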

In ISCP, we built integration with multiple services and followed this principle heavily. For instance:

- While integrating with DocuSign, we leveraged a ServiceHMAC token and included the `documentId` as part of the embedded HMAC signature computation. This means that every callback URL was restricted to only the record it was created for, and any change in the value upon actual callback would not pass the integrity check of the ServiceHMAC scheme. If DocuSign were compromised, any adverse impact on our system would be limited to the operation and records that the callback URLs were pre-configured for. Additionally, the ServiceHMAC scheme adds an expiry to the signature token, which could be from a few hours to a few days depending on the workflow delay (configurable for endpoints individually). This meant that most callback URLs older than a few days were automatically rendered invalid.

- While integrating with Zendesk for support staff, we leveraged ServiceKey and granted permits to only those `GET` endpoints that the staff needed to fetch customer data on Zendesk’s dashboard, to effectively serve customer support requests.
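The idea behind binding a callback URL to one record and one time window can be sketched with a generic HMAC in Python. This is not the ServiceHMAC scheme itself, whose details aren’t described here; the message layout, parameter names and URL format below are assumptions.

```python
import hashlib
import hmac
import time
from urllib.parse import urlencode


def sign_callback(secret: bytes, document_id: str, expires_at: int) -> str:
    # Both the record id and the expiry are covered by the signature,
    # so changing either one invalidates it.
    message = f"{document_id}|{expires_at}".encode()
    return hmac.new(secret, message, hashlib.sha256).hexdigest()


def build_callback_url(base_url, secret, document_id, ttl_seconds, now=None):
    issued = int(time.time()) if now is None else now
    expires_at = issued + ttl_seconds
    query = urlencode({
        "documentId": document_id,
        "expires": expires_at,
        "sig": sign_callback(secret, document_id, expires_at),
    })
    return f"{base_url}?{query}"


def verify_callback(secret, document_id, expires_at, signature, now=None):
    current = int(time.time()) if now is None else now
    if current > expires_at:
        return False  # expired URLs are rejected outright
    expected = sign_callback(secret, document_id, expires_at)
    # Constant-time comparison avoids leaking how much of it matched.
    return hmac.compare_digest(expected.encode(), signature.encode())
```

A third party holding such a URL can act on that one document until the expiry passes, and on nothing else, which is what limits the blast radius if the third party is compromised.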

4. Limit the amount of data returned in the API response

In a prior section, we’ve learnt that the increase in the popularity of writing server-side software using the API-first approach has given rise to a threat of leaking unintended sensitive data via the untrusted clients.

In the recent massive leaks of hundreds of millions of Facebook and LinkedIn user profiles, data scraping via public APIs has been identified as the major cause. The data dumps also include personal information, such as mobile numbers, email addresses and geolocations of the users, that is not visible to users on the sites. Clearly, the APIs were returning more data than users needed to see, and hackers exploited this vulnerability.

So, the fourth tenet of Zero Trust approach is to limit the data returned by default in the API responses to the minimum possible, and require user consent or additional privileges to return additional data.

In ISCP, I had architected a security filter, hooked into the data-to-DTO mapper, which would decide whether or not to return the detailed client view. By default, a summary view was returned that had only basic details of the client, while the detailed view had richer details, meant for employees coming from the employee portal. Although almost all users of the system had access to the ‘get clients’ API, only users having a specified role (designated via an ADA permit) and calling from the specified portal would get the detailed view.

Please note that the portal check was primarily for the sake of efficiency (to avoid returning unnecessary additional data if the employee was making a call through the client portal). Making access decisions based on anything that the calling client can easily spoof doesn’t qualify as a security measure, let alone a Zero Trust one.
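A mapper-level filter of this kind might look like the Python sketch below. The field names and the `clients.read_detail` permission are hypothetical, and the portal check is deliberately left out because, as noted above, it was an efficiency measure rather than a security one.

```python
# Fields every caller of the 'get clients' API may see (hypothetical).
SUMMARY_FIELDS = ("id", "name")
# Extra fields released only to callers holding an explicit permission.
DETAIL_FIELDS = SUMMARY_FIELDS + ("email", "phone", "tax_id")


def to_client_dto(record: dict, caller_permissions: set) -> dict:
    """Map a stored record to a response, summary view by default."""
    if "clients.read_detail" in caller_permissions:
        fields = DETAIL_FIELDS
    else:
        fields = SUMMARY_FIELDS
    # Copy only listed fields, so a column added to the record later
    # is never exposed until someone lists it here on purpose.
    return {name: record[name] for name in fields if name in record}
```

Because the response is built from an explicit list rather than by serializing the whole record, the default outcome of any oversight is less data, not more.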

5. Detect and report threats by continuous logging and monitoring

Regardless of how good your current Zero Trust security posture is, if you don’t log, monitor and analyze requests made to your web apps, you’re not doing Zero Trust security properly. The Zero Trust approach emphasizes continuous monitoring and analysis, so as to recognize and mitigate threats such as compromised accounts and penetration attempts in progress.

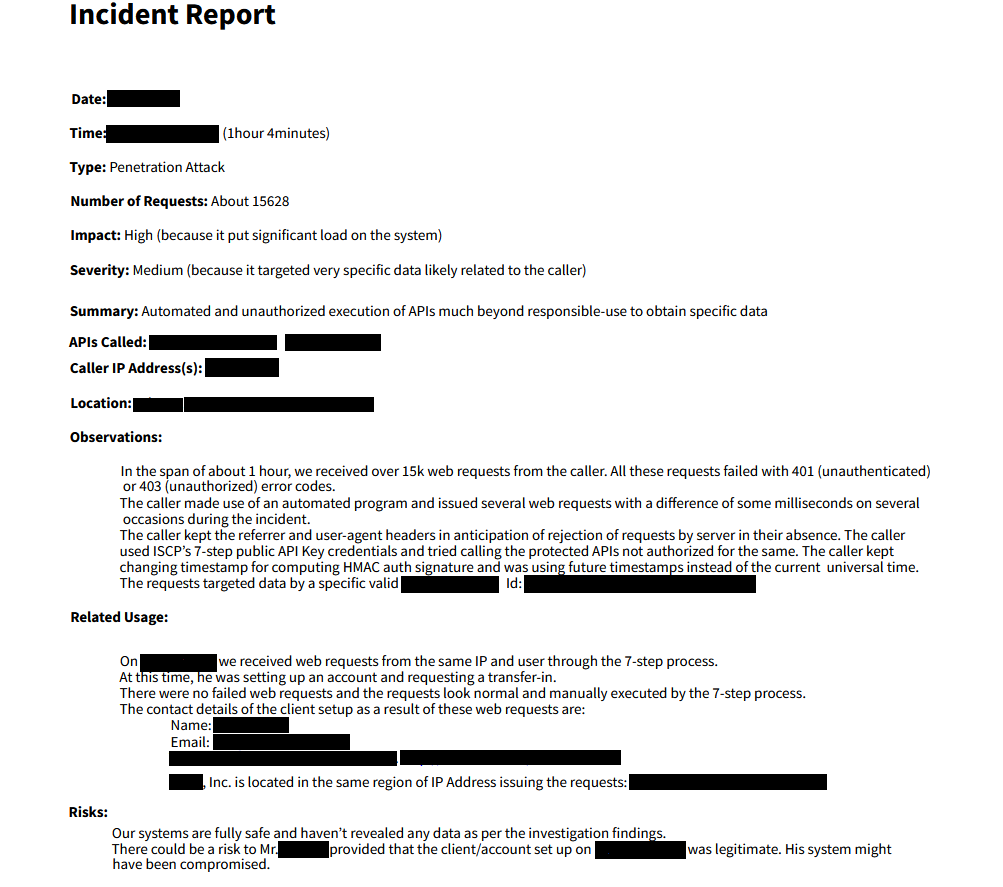

In ISCP we were logging all requests (without body) to Kibana on prod, and we had dashboards created for security related errors such as ‘403 - unauthorized’, ‘401 - unauthenticated’ and so on. A few of the interesting incidents I saw are given below:

- On one occasion in Feb 2019, we discovered that 15k+ requests had been made in about an hour, from one IP, to endpoints meant for our public investor sign up form. It wasn’t humanly possible to issue that many requests from a browser, so we assumed malice. Upon investigating further, I found a successful investor sign up form submission from the same IP about 8 days earlier. It was possible that the client machine was compromised.

ISCP security system powered by ASPSecurityKit successfully defended the attack. In fact, features like timestamp based expiry of HMAC frustrated the caller as many requests just failed because the caller tried using future timestamps.

The attacker possessed a valid recordId of a transfer request issued for the successful sign up that had happened 8 days earlier from the same IP. Along with this Id, the attacker made use of public API key credentials and attempted to call protected APIs, but as ISCP followed ASK’s least privilege access principle, those calls resulted in 403 - unauthorized errors.

I made an incident report and shared it with the legal team, to make way for the next steps including informing the client. A snapshot of the report is given below (identifying information – including of persons and machines – obfuscated to protect the privacy of our client, Forge Trust.)

- On another occasion, we discovered several 403 failures of APIs related to the investor sign up form, called 7-Step. As stated above, these APIs were protected with public API key credentials, which included origin domain validation as a pseudo protection. It is pseudo because, as already mentioned, our security decisions should never depend upon any value sent by the client that can be spoofed easily. The ORIGIN header is a value that the browser sends but that can easily be spoofed. However, in this case it was particularly useful, because the attacker had set up a phishing site with our 7-Step plugin, which was making calls to our public APIs using the public key (included as part of the plugin code). The APIs were failing because only designated domains were allowed to use a particular public key, and each of our vendors had their own keys, each with a unique white-list of the domains where they had set up the plugin.

A phishing attack does depend on the legitimate users executing the required request such as filling a form with account/personal details – and modern browsers do not allow changing of ORIGIN header via JavaScript. Hence, the requests were sending the attacker’s phishing domain and our security layer rightfully blocked the requests.

Another incident report was prepared and sent to the legal team, and I also emailed the hosting service provider on whose server the phishing site was hosted.

- Infrequently, we also witnessed request failures caused by features such as the IP firewall denying non-white-listed IPs, which led us to build automated failure notifications (by email) to the vendors, so they could confirm whether their sensitive site-to-site API key had been compromised.

Then there were frequent 403 failures because one of our vendors had an automated test suite that would invoke APIs with invalid Ids, and the ADA check would promptly deny such requests.

In all the above real-world incidents, the following two points can be consistently observed:

- The Zero Trust mindset coupled with a pure Zero Trust based security framework like ASPSecurityKit can really help you defend against most – if not all – kinds of cyber attacks.

- Aggressive logging and continuous monitoring of requests help you detect threats as they are occurring and take actions to mitigate them, including building automated notification features, hardening your security posture and reporting to the legal team.
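As an illustration of what automated monitoring over request logs can do, the Python sketch below flags an IP that exceeds a request budget within a sliding time window, the pattern seen in the 15k-requests-in-an-hour incident. It is not how ISCP’s dashboards worked; the class name and thresholds are assumptions, and timestamps are assumed to arrive in non-decreasing order.

```python
from collections import defaultdict, deque


class BurstDetector:
    """Flags an IP that exceeds max_requests within window_seconds."""

    def __init__(self, max_requests: int, window_seconds: float):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self._seen = defaultdict(deque)  # ip -> recent request timestamps

    def record(self, ip: str, timestamp: float) -> bool:
        """Record one request; return True if the IP is over the limit."""
        times = self._seen[ip]
        times.append(timestamp)
        # Drop timestamps that have fallen out of the sliding window.
        while times and timestamp - times[0] > self.window_seconds:
            times.popleft()
        return len(times) > self.max_requests
```

Feeding each logged request through `record` and alerting on a `True` result turns a pattern someone has to spot on a dashboard into a notification that arrives while the attack is still in progress.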

6. Ensure security of the application environment

As explained in a prior section, dependency on third-party services and packages is increasing in enterprise systems and software. A vulnerability in a popular package can expose dependent enterprise systems to critical issues such as remote code execution or leaks of private memory data.

Consider the infamous Heartbleed bug in the hugely popular OpenSSL library, which was believed to have left some 17% (around half a million) of the Internet’s secure web servers certified by trusted authorities vulnerable to attack, allowing theft of the servers’ private keys and users’ session cookies and passwords. Or consider the critical remote code execution (RCE) vulnerability in Apache Log4j, a popular Java logging framework, which made a huge number of applications, frameworks and cloud services using Log4j vulnerable. It’s clear that Zero Trust thinking doesn’t stop with just your code; it must extend to cover the safety of the environment as well.

Some recommendations and best practices in this regard are:

- Keep your packages up-to-date. Especially, keep a watch on release of security patches by subscribing to the notification feature of your package manager or something similar.

- Prefer packages that have gone through a security audit over ones that have not. Refrain from using obscure packages. It’s true that popular packages aren’t immune to exploitation, but they are also quick to get patched, for the same reason that they are popular. An obscure or little-known package might carry a vulnerability for a long time before anyone even notices it. Or it might get taken over by a hacker after the original author has moved on to something else, so again, no one notices the transfer of ownership.

- Turn off features you don’t need from a package through its configuration (if it provides such settings) and/or see if you can load the package in a lower trust mode rather than full trust.

- Use signed packages and include verification of signature of every package in your build and verification step.

- Create and safely store, in a read-only configuration, a cryptographic hash of every package binary once you have installed/upgraded a version (prefer SHA-256; MD5 is no longer collision-resistant); verify the binaries using this hash as part of your build and verification system.

- Do not push packages from public package repositories to the production or any other environment with real customer data. Maintain a local repository to ensure a safe availability of the verified versions locally.

- Keep sensitive production environment settings (API Keys/passwords/connection strings) in encrypted form or at least not accessible to everyone having access to the code.

- Block random outbound internet access on your servers. Most web applications do not at all need to initiate requests to random servers on the internet. Hence, using firewall rules, limit outbound access to only a pre-defined white-list of hosts or IP ranges the web apps need to connect with.

- Using the firewall rules, block all inbound ports on the servers except the ones your web apps listen to for the incoming requests.

- Audit access to the production database and do not grant its access to everyone within the team.

- Use read-only users to access production database as much as possible, so as to avoid accidental/intentional modification to critical data.

- Make sure unintended directories and files aren’t exposed from your site’s public folder. For example, the `.git` folder of a Git repository shouldn’t be a part of the deployment, or at least public access to it should be denied using your web server’s access control lists (ACLs). Otherwise, you risk exposing your private source code.
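The hash-pinning recommendation in the list above can be sketched as a small build-time check in Python. The function names and the idea of a `pinned` mapping from file name to expected digest are assumptions for illustration, and SHA-256 is used here as the hash.

```python
import hashlib
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Hash a file in chunks so large binaries need not fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def find_mismatches(package_dir: Path, pinned: dict) -> list:
    """Return names whose file is missing or differs from its pinned hash.

    `pinned` maps a file name to the hex digest recorded when that
    version was reviewed and installed (hypothetical format).
    """
    mismatches = []
    for name, expected in pinned.items():
        candidate = package_dir / name
        if not candidate.is_file() or sha256_of(candidate) != expected:
            mismatches.append(name)
    return sorted(mismatches)
```

A build step would fail whenever `find_mismatches` returns a non-empty list. This sketch does not flag extra files that are absent from the pinned list; a stricter check would reject those as well.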

Zero Trust: our end-to-end approach for SDLC

It’s evident that Zero Trust enabled services and software have emerged as the best way to counter the current and future threats in the world of cybersecurity, chiefly because Zero Trust proposes a new way of thinking about security, based on zero implicit trust.

We’re the only security solutions provider in the market with a complete offering that helps you rapidly develop business web applications and APIs on .NET based on Zero Trust security. Our solution includes:

- A security framework – ASPSecurityKit(ASK) – for .NET designed from scratch based on Zero Trust approach. A proven framework, ASK has been protecting, amongst others, Forge Trust’s investor platform that manages 1.3 M+ accounts and $13 BN+ assets under custody.

- Hands-on workshops and training for your development staff on the end-to-end application of Zero Trust security which involves the ASPSecurityKit framework and other tools, best practices and mindset.

- Docs/resources for continuous learning, security analysis/review of projects and on-going support to help you win more projects.

- Analysis of existing products for Zero Trust compliance, with actionable recommendations and implementation services to fill the gaps quickly.

We’d be more than happy to give a quick presentation with more details to your leadership and tech team in this regard. Send us an email at [email protected] or call us on +91 (888) 633-3058.

The Zero Trust Thinking series

We’ve started the Zero Trust Thinking (ZTT) series to unravel the Zero Trust security model through hands-on samples, articles and videos in easy-to-understand developer terminology, thereby helping developers pick up and build the Zero Trust mindset.

We believe that if security concepts, attacks and defence techniques are demystified in simple language, it’s easy for developers to develop Zero Trust thinking – and if every developer codes with this Zero Trust thinking, we’d be living in a cyber world much safer for everyone.

ZTT also gives us an opportunity to showcase our Zero Trust security framework – ASPSecurityKit (ASK) – which we consider to be by far the best and only true Zero Trust security framework available for .NET web applications and services. ASK is a proven framework securing systems like Forge Trust’s ISCP (a financial platform with 1.3 M+ accounts and $13 BN+ assets under custody).

Credits

- Thanks to Abhilash for reading drafts, preparing images and other assistance.

- “Security hype cycle” image credit : Gartner.

- “Sophisticated cyber attacks” image created using www.freepik.com.
